Smarter Imaging: AI Meets Automation in Cell Microscopy

ibidi Blog | November 20, 2025 | Abhishek Derle, ibidi GmbH

In recent years, the combination of automation and artificial intelligence has reshaped cell imaging. Automation arrived first. It changed the day-to-day reality of imaging by taking over repetitive work and standardizing acquisition at scale. Motorized stages with autofocus keep hundreds of wells in reliable focus. Acquisition software applies consistent exposure and illumination from plate to plate. Incubation systems maintain a steady temperature and CO₂ level, keeping live cells under physiological conditions. The result is clean, repeatable images with far less hands-on effort and far fewer sources of variability.

Artificial intelligence then added the next layer. It turns large image sets into quantitative answers by detecting cells and organelles and measuring intensity, texture, shape, and movement across channels and time. Recent advances in machine learning, especially deep learning, enable models to find subtle, hidden structures in both simple and complex imaging data [1]. Deep learning models learn phenotypes directly from data, distinguishing between healthy and stressed cells, grouping compounds by their effects, and surfacing patterns that the human eye easily misses. The payoff is speed, objectivity, and depth, with models applying the same criteria to every frame.

Together, automation and AI have given rise to a new approach to microscopy known as high-content imaging. By combining automated acquisition with intelligent analysis, researchers can move beyond simple snapshots to generate rich, multidimensional datasets that describe how cells behave, interact, and respond to their environment [2].

What Is High-Content Imaging?

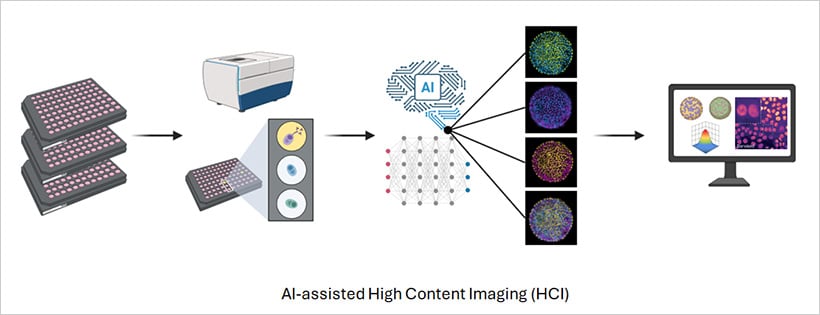

High-content imaging (HCI) combines automation and AI in a single workflow. Automated microscopes capture thousands of images across multiwell plates, while analysis pipelines extract detailed information from every cell in every well. Rather than relying on a single readout, HCI measures a wide range of parameters, including morphology, fluorescence intensity, protein localization, and organelle behavior.
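Extracting "detailed information from every cell" usually means measuring each segmented cell individually. The minimal sketch below, in plain Python, assumes an upstream segmentation step (for example, a deep learning model) has already produced a label mask in which each cell's pixels carry that cell's ID, and computes three classic per-cell measurements: area, mean intensity, and centroid. The function name `per_cell_features` and the toy arrays are hypothetical; real pipelines compute hundreds of such features, typically with array libraries.

```python
from collections import defaultdict

def per_cell_features(labels, intensity):
    """Compute simple per-cell measurements from a labeled segmentation mask.

    labels:    2D list of ints; 0 = background, k > 0 = pixels of cell k
               (assumed to come from an upstream segmentation model).
    intensity: 2D list of floats with the same shape, e.g. one fluorescence channel.
    Returns {cell_id: {"area", "mean_intensity", "centroid"}}.
    """
    pixels = defaultdict(list)  # cell_id -> list of (row, col, value)
    for r, (label_row, int_row) in enumerate(zip(labels, intensity)):
        for c, (lab, val) in enumerate(zip(label_row, int_row)):
            if lab > 0:
                pixels[lab].append((r, c, val))

    features = {}
    for cell_id, pts in pixels.items():
        area = len(pts)  # pixel count, a basic morphology feature
        features[cell_id] = {
            "area": area,
            "mean_intensity": sum(v for _, _, v in pts) / area,
            "centroid": (sum(r for r, _, _ in pts) / area,
                         sum(c for _, c, _ in pts) / area),
        }
    return features

# Hypothetical 3x4 image containing two cells.
labels = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 2],
]
intensity = [
    [0.0, 10.0, 20.0, 0.0],
    [0.0, 30.0, 40.0, 0.0],
    [0.0, 0.0, 0.0, 5.0],
]
feats = per_cell_features(labels, intensity)
print(feats[1])  # → {'area': 4, 'mean_intensity': 25.0, 'centroid': (0.5, 1.5)}
```

Each cell becomes one row of measurements, which is what later makes per-well summaries and phenotypic comparisons possible.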

Because the workflow is automated, every well is imaged under consistent conditions. Software maintains focus, exposure, and illumination, so the resulting datasets are reproducible and comparable. Modern automated microscopes with fluorescence and confocal capabilities, paired with AI analysis software, increase the throughput and accuracy of phenotypic assays and make large-scale screens faster and more reliable [3]. The outcome is a precise phenotypic map of how cells respond to drugs, stimuli, or genetic changes across space and time.
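One common way software keeps each well in focus is image-based autofocus: acquire a short z-stack, score every plane for sharpness, and use the sharpest one. The sketch below assumes the variance of a discrete Laplacian as the sharpness score, a widely used focus metric; it illustrates the idea and does not describe any particular instrument, many of which also rely on hardware (for example, laser-based) autofocus. The helper `spot` and the toy z-stack are hypothetical.

```python
def laplacian_variance(img):
    """Focus score: variance of a 4-neighbour Laplacian over interior pixels.
    Sharper images have stronger edges and therefore a higher score."""
    values = []
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            lap = (img[r - 1][c] + img[r + 1][c] + img[r][c - 1] + img[r][c + 1]
                   - 4 * img[r][c])
            values.append(lap)
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def best_focus_plane(z_stack):
    """Return the index of the sharpest plane in a list of 2D images."""
    scores = [laplacian_variance(plane) for plane in z_stack]
    return max(range(len(scores)), key=scores.__getitem__)

def spot(size, center_value, neighbour_value):
    """Hypothetical test image: a bright spot on a dark background."""
    img = [[0.0] * size for _ in range(size)]
    m = size // 2
    img[m][m] = center_value
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        img[m + dr][m + dc] = neighbour_value
    return img

z_stack = [
    [[1.0] * 5 for _ in range(5)],  # far out of focus: featureless
    spot(5, 9.0, 0.0),              # in focus: sharp spot
    spot(5, 3.0, 2.0),              # slightly defocused: blurred spot
]
print(best_focus_plane(z_stack))  # → 1
```

A real system would score only a region of interest and search z coarse-to-fine, but the principle of maximizing a sharpness score is the same.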

Fig. 1 | Schematic overview of a high-content imaging experiment.

Imaging-grade labware plays a crucial role in supporting this workflow. ibidi’s µ-Plate 96 Well 3D, µ-Plate 96 Well Square, µ-Plate 96 Well Round, and µ-Plate 384 Well Glass Bottom are designed specifically for high-throughput microscopy. The #1.5 Polymer or #1.5H Glass Coverslip Bottoms provide the optical clarity required for fluorescence and confocal imaging, while the black-walled design minimizes crosstalk between wells. Together, these features keep image quality consistent and the images ready for AI-based analysis.

The Benefits of Combining Automation and AI

The power of combining automation and AI lies in how these technologies complement each other. Automation generates large, consistent datasets, and AI extracts meaning from them. Together, they accelerate discovery, improve reproducibility, and expand the boundaries of what can be measured.

Scale and Speed

Automated imaging systems can process entire multiwell plates in minutes, capturing thousands of images without human input. Some setups can image forty 96-well plates in only two hours. This scale allows researchers to explore full compound libraries, large genetic screens, or time-lapse experiments that would be impossible to perform manually. Automation makes high-throughput imaging both efficient and routine, freeing researchers to focus on interpretation rather than acquisition.
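To put the forty-plate figure in perspective, the arithmetic below works out the time budget per well. It assumes the two hours cover imaging only and that every well of every plate is imaged. The fields-per-well and channel counts are hypothetical, added only to show how quickly the per-image budget shrinks once a well yields several images.

```python
# Throughput implied by "forty 96-well plates in two hours".
plates = 40
wells_per_plate = 96
total_minutes = 120

total_wells = plates * wells_per_plate                  # 3840 wells
seconds_per_well = total_minutes * 60 / total_wells     # 7200 s / 3840 = 1.875 s
wells_per_minute = total_wells / total_minutes          # 32 wells per minute

# Hypothetical acquisition settings (not from the source):
fields_per_well = 4
channels = 3
images = total_wells * fields_per_well * channels              # 46080 images
seconds_per_image = seconds_per_well / (fields_per_well * channels)  # 0.15625 s

print(total_wells, seconds_per_well, wells_per_minute, images, seconds_per_image)
# → 3840 1.875 32.0 46080 0.15625
```

Under these assumptions, each well gets under two seconds, including stage movement and focusing, which is why fast autofocus and motorized stages matter as much as camera speed.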

Depth of Insight

AI extracts hundreds of parameters from each image, revealing subtle morphological or molecular changes that evolve over time. Deep learning, especially convolutional neural networks (CNNs), has become a cornerstone of image analysis [4]. These models learn phenotypes directly from data, recognize differences between healthy and stressed cells, classify compound effects based on phenotypic profiles, and uncover new cellular states that may not be visible to the naked eye [5]. Once trained, the same model can analyze new datasets in minutes, performing work that would take a human analyst days or weeks. This turns microscopy from a descriptive tool into a rich, quantitative platform for understanding cellular behavior.
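Classifying compound effects from phenotypic profiles often comes down to comparing vectors of features. The sketch below is a deliberately simple stand-in, not a CNN: it assumes each treatment has already been summarized as a profile of feature shifts relative to untreated controls, and it assigns an unknown compound to the reference profile with the highest cosine similarity. The reference names, features, and values are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def nearest_reference(profile, references):
    """Return (name, similarity) of the most similar reference profile."""
    return max(((name, cosine(profile, ref)) for name, ref in references.items()),
               key=lambda item: item[1])

# Hypothetical profiles: each value is a feature's shift relative to controls,
# e.g. (nuclear area, mitochondrial intensity, cell roundness).
references = {
    "mechanism_A": [2.0, -1.0, 0.5],
    "mechanism_B": [-0.5, 1.5, 2.0],
}
unknown = [1.8, -0.9, 0.4]
label, sim = nearest_reference(unknown, references)
print(label, round(sim, 3))  # → mechanism_A 1.0
```

Cosine similarity compares the direction of a profile rather than its magnitude, so a weaker dose of a compound with the same mechanism still lands near its reference.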

Reproducibility and Objectivity

Automation ensures identical imaging conditions. AI applies the same objective rules to every frame, reducing bias and increasing reproducibility across plates, instruments, and sites. This consistency is essential for large-scale, multi-site experiments, helping to turn imaging into a truly quantitative discipline.

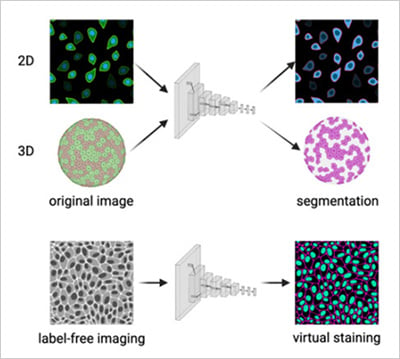

Compatibility with 3D Models

As biology advances toward more realistic models, AI and automation enable the study of 3D spheroids, organoids, and co-cultures with the same precision as 2D systems [6] [7]. Automated microscopes maintain focus through thick samples, while AI algorithms reconstruct and quantify 3D structures over time (Fig. 2a). In patient-derived organoid studies, high-content imaging combined with AI has already helped predict how individual tumors respond to specific drugs, paving the way for data-driven precision medicine [8].
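Quantifying a 3D structure such as a spheroid often starts from a segmented z-stack. As a minimal sketch, assuming a binary mask per z-plane (1 = spheroid, 0 = background) from an upstream segmentation model, volume is the voxel count multiplied by the volume of one voxel, and repeating this at each time point gives a growth curve. The pixel size, z-step, time points, and toy masks below are hypothetical; real values come from the objective, camera, and z-stack settings.

```python
def spheroid_volume_um3(mask_stack, pixel_size_um, z_step_um):
    """Volume of a segmented object from a binary z-stack mask.

    mask_stack: list of 2D binary masks (one per z-plane), 1 = object voxel.
    pixel_size_um: lateral pixel size in micrometres (x = y assumed).
    z_step_um: spacing between z-planes in micrometres.
    """
    voxel_count = sum(v for plane in mask_stack for row in plane for v in row)
    voxel_volume = pixel_size_um * pixel_size_um * z_step_um  # µm³ per voxel
    return voxel_count * voxel_volume

def block(n_planes):
    """Hypothetical mask: n_planes identical 2x2 cross-sections."""
    return [[[1, 1], [1, 1]] for _ in range(n_planes)]

# Hypothetical time-lapse: the spheroid spans more z-planes as it grows.
time_points = {0: block(2), 24: block(3)}  # hours -> mask stack
volumes = {t: spheroid_volume_um3(m, pixel_size_um=2.0, z_step_um=5.0)
           for t, m in time_points.items()}
fold_change = volumes[24] / volumes[0]
print(volumes, fold_change)  # → {0: 160.0, 24: 240.0} 1.5
```

Voxel counting is the simplest volume estimate; it depends directly on segmentation quality and z-sampling, which is where consistent automated focus through thick samples pays off.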

The ibidi µ-Plate 96 Well 3D supports uniform spheroid formation and stable imaging over long time courses, providing a reliable platform for quantitative high-content imaging of complex models.

Fig. 2 | a) Convolutional neural network performing cell segmentation in 2D and 3D microscopy datasets. b) Convolutional neural network predicting fluorescence channels from label-free brightfield images (virtual staining). Adapted from Carreras-Puigvert, J. and Spjuth, O., 2024 [11].

New Discoveries and Predictive Insights

AI’s ability to detect subtle patterns enables new kinds of biological discovery. Deep learning can group compounds by mechanism of action, reveal off-target effects, and identify early markers of disease. In live-cell imaging, AI now tracks cell movement, division, and shape changes in real time, revealing how cells respond dynamically to drugs or genetic perturbations. This has made time-lapse imaging a central component of high-content screening, providing insight into the kinetics of cellular responses [9] [10].
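Tracking cells across a time-lapse means linking each cell in one frame to the same cell in the next. The sketch below uses greedy nearest-neighbour linking of cell centroids with a maximum allowed displacement. This is a simple classical baseline, not the AI-based trackers described above; the function name, coordinates, and `max_dist` threshold are hypothetical. Cells left unlinked start new tracks, which is one simple cue for a division event or a cell entering the field of view.

```python
import math

def link_frames(prev, curr, max_dist):
    """Greedy nearest-neighbour linking of cell centroids between two frames.

    prev, curr: dicts {cell_id: (x, y)} for consecutive time points.
    Returns {curr_id: prev_id or None}; None marks the start of a new track.
    """
    # All candidate pairs, closest first.
    candidates = sorted(
        (math.dist(p_xy, c_xy), p_id, c_id)
        for p_id, p_xy in prev.items()
        for c_id, c_xy in curr.items()
    )
    links = {c_id: None for c_id in curr}
    used_prev = set()
    for d, p_id, c_id in candidates:
        if d > max_dist:
            break  # all remaining pairs are even farther apart
        if p_id in used_prev or links[c_id] is not None:
            continue  # each cell may be linked at most once
        links[c_id] = p_id
        used_prev.add(p_id)
    return links

# Hypothetical centroids (µm): cell "b" appears to divide into cells 2 and 3.
prev = {"a": (0.0, 0.0), "b": (10.0, 0.0)}
curr = {1: (1.0, 0.0), 2: (10.0, 1.0), 3: (10.0, -1.5)}
print(link_frames(prev, curr, max_dist=3.0))  # → {1: 'a', 2: 'b', 3: None}
```

Cell 3 sits next to the just-linked cell 2 but has no partner of its own, so a downstream step could flag it as a candidate daughter of cell "b".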

To maintain the stability and reproducibility of long-term live-cell experiments, ibidi Stage Top Incubators offer precise control of temperature, humidity, CO₂, and O₂ directly on the microscope stage. This ensures optimal cell health and consistent imaging conditions throughout extended time-lapse studies.

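At the same time, newer generative AI models are being explored for predictive analysis, simulating cellular responses or suggesting new experimental conditions based on existing imaging data. Virtual staining, in which a network predicts fluorescence channels from label-free brightfield images, is one example of such prediction (Fig. 2b).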

The Future of Intelligent Imaging

The convergence of AI, automation, and advanced microscopy is redefining what scientists can learn from images. In the near future, AI will not only analyze data but also guide microscopes in real time, adjusting focus, identifying key events, or triggering higher-resolution imaging when needed.
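Real-time guidance of this kind is often framed as a feedback loop: image quickly, let a model score each frame, and switch to a more detailed acquisition only when something relevant happens. The sketch below shows that control flow under simplifying assumptions. `detect_event` and `acquire_high_res` are hypothetical placeholders for a trained model and a microscope-control call, not functions of any real acquisition software, and the 0.9 threshold is arbitrary.

```python
from typing import Callable, Iterable, List

def smart_acquisition(
    frames: Iterable[object],
    detect_event: Callable[[object], float],
    acquire_high_res: Callable[[int], None],
    threshold: float = 0.9,
) -> List[int]:
    """Event-triggered acquisition loop (hypothetical sketch).

    frames: fast, low-resolution overview images, one per time point.
    detect_event: model returning an event probability for a frame
        (e.g. onset of mitosis); stands in for a trained classifier.
    acquire_high_res: callback that would switch the microscope to a
        higher-resolution mode at that time point (hypothetical hook).
    Returns the time-point indices at which high-res imaging was triggered.
    """
    triggered = []
    for t, frame in enumerate(frames):
        if detect_event(frame) >= threshold:
            acquire_high_res(t)
            triggered.append(t)
    return triggered

# Simulated run: each "frame" is just a precomputed event score.
scores = [0.1, 0.2, 0.95, 0.4, 0.92]
log = []
hits = smart_acquisition(scores, detect_event=lambda s: s,
                         acquire_high_res=log.append)
print(hits)  # → [2, 4]
```

Keeping the model and the hardware call behind separate callables means the same loop could be tested offline on recorded data before being connected to a live instrument.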

Fully integrated imaging pipelines that connect sample preparation, image acquisition, analysis, and visualization are already becoming a reality. As these technologies continue to advance, they will enable faster, more accurate, and more predictive imaging, allowing researchers to measure and understand cellular processes at unprecedented depth and scale.

For scientists, this means less time spent on repetitive work and more time spent interpreting biological data. And for cell imaging itself, it marks a shift from simply observing life to understanding it through the combined power of automation and artificial intelligence.

References

[1] Wong, F., et al., Discovery of a structural class of antibiotics with explainable deep learning. Nature, 2024. 626(7997): p. 177–185.

[2] Way, G.P., et al., Evolution and impact of high content imaging. SLAS Discovery, 2023. 28(7): p. 292–305.

[3] Kupczyk, E., et al., Unleashing high content screening in hit detection – Benchmarking AI workflows including novelty detection. Computational and Structural Biotechnology Journal, 2022. 20: p. 5453–5465.

[4] Gupta, A., et al., Deep Learning in Image Cytometry: A Review. Cytometry Part A, 2019. 95(4): p. 366–380.

[5] Pachitariu, M. and C. Stringer, Cellpose 2.0: how to train your own model. Nature Methods, 2022. 19(12): p. 1634–1641.

[6] Choo, N., et al., High-Throughput Imaging Assay for Drug Screening of 3D Prostate Cancer Organoids. SLAS Discovery, 2021. 26(9): p. 1107–1124.

[7] Betge, J., et al., The drug-induced phenotypic landscape of colorectal cancer organoids. Nature Communications, 2022. 13(1): p. 3135.

[8] Jiménez-Luna, J., et al., Artificial intelligence in drug discovery: recent advances and future perspectives. Expert Opinion on Drug Discovery, 2021. 16(9): p. 949–959.

[9] Yang, X., et al., A live-cell image-based machine learning strategy for reducing variability in PSC differentiation systems. Cell Discovery, 2023. 9(1): p. 53.

[10] Wiggins, L., et al., The CellPhe toolkit for cell phenotyping using time-lapse imaging and pattern recognition. Nature Communications, 2023. 14(1): p. 1854.

[11] Carreras-Puigvert, J. and O. Spjuth, Artificial intelligence for high content imaging in drug discovery. Current Opinion in Structural Biology, 2024. 87: p. 102842.
